SIGGRAPH Asia Poster 2024

DualAvatar: Robust Gaussian Splatting Avatar with Dual Representation

Jinsong Zhang1,2, I-Chao Shen2, Jotaro Sakamiya2, Yu-Kun Lai3, Takeo Igarashi2*, Kun Li1*

1 Tianjin University 2 The University of Tokyo 3 Cardiff University

* Corresponding author

[Paper] [Poster]

Abstract

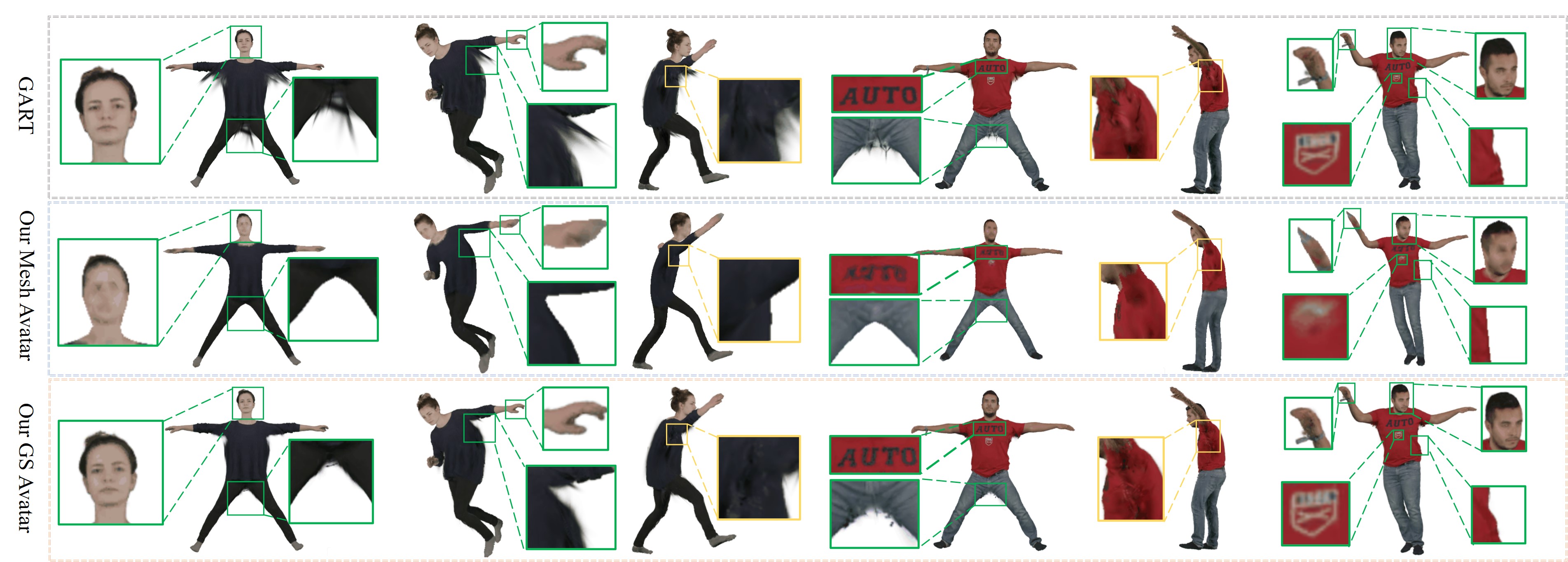

Recent 3D avatars reconstructed with 3D Gaussian Splatting (3DGS) achieve high-quality results on known poses but produce artifacts on unseen poses. To address this issue, we propose DualAvatar, a robust method that reconstructs an avatar from monocular video and renders unseen poses more accurately. Our method achieves this by simultaneously optimizing a 3DGS avatar and a mesh avatar. During training, the mesh avatar constrains the unseen regions of the 3DGS avatar, while the 3DGS avatar provides geometry information to the mesh avatar. Experimental results on the PeopleSnapshot dataset demonstrate the superior performance of DualAvatar.

Method

Fig. 1. The overview of our method.
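
The mutual constraint between the two representations can be illustrated with a deliberately tiny toy problem. The sketch below is not the DualAvatar implementation: it replaces the 3DGS avatar and the mesh avatar with two 1D arrays (`g` and `m`), and the function name `joint_optimize`, the weights `lam` and `mu`, and the quadratic losses are all assumptions made for illustration. It only shows the general pattern of jointly optimizing two coupled representations, where a smooth one regularizes regions that the data term never sees.

```python
def joint_optimize(observed, seen, steps=2000, lr=0.05, lam=1.0, mu=1.0):
    """Toy joint optimization of two coupled 1D representations.

    g: stand-in for the detailed representation, supervised only where seen[i] is True.
    m: stand-in for the smooth representation, regularized by a neighbor-smoothness term.
    Loss (hypothetical, for illustration only):
        sum_seen (g_i - observed_i)^2
      + lam * sum_i (g_i - m_i)^2          # coupling between the two representations
      + mu  * sum_i (m_i - m_{i+1})^2      # smoothness prior on m
    """
    n = len(observed)
    g = [0.0] * n
    m = [0.0] * n
    for _ in range(steps):
        grad_g = [0.0] * n
        grad_m = [0.0] * n
        for i in range(n):
            # Data term: only observed entries supervise g.
            if seen[i]:
                grad_g[i] += 2.0 * (g[i] - observed[i])
            # Coupling term: pulls g and m toward each other everywhere.
            diff = g[i] - m[i]
            grad_g[i] += 2.0 * lam * diff
            grad_m[i] -= 2.0 * lam * diff
        for i in range(n - 1):
            # Smoothness term: neighboring entries of m should agree.
            d = m[i] - m[i + 1]
            grad_m[i] += 2.0 * mu * d
            grad_m[i + 1] -= 2.0 * mu * d
        # Plain gradient descent step on both representations at once.
        g = [gi - lr * gg for gi, gg in zip(g, grad_g)]
        m = [mi - lr * gm for mi, gm in zip(m, grad_m)]
    return g, m


if __name__ == "__main__":
    observed = [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]   # last two values are never used
    seen = [True, True, True, True, False, False]
    g, m = joint_optimize(observed, seen)
    print("coupled:  ", [round(x, 3) for x in g])
    g_alone, _ = joint_optimize(observed, seen, lam=0.0)
    print("uncoupled:", [round(x, 3) for x in g_alone])
```

With the coupling enabled, the unseen entries of `g` are pulled toward the value observed in the seen region, because `m` propagates it through the smoothness term. With `lam=0.0` the unseen entries of `g` receive no gradient and stay at their initial value, which is the toy analogue of artifacts in regions the training views never cover.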

Demo Video

Citation

Jinsong Zhang, I-Chao Shen, Jotaro Sakamiya, Yu-Kun Lai, Takeo Igarashi, Kun Li. "DualAvatar: Robust Gaussian Splatting Avatar with Dual Representation". ACM SIGGRAPH Asia 2024 Posters.

@incollection{zhang2024dualavatar,
  author = {Jinsong Zhang and I-Chao Shen and Jotaro Sakamiya and Yu-Kun Lai and Takeo Igarashi and Kun Li},
  title = {DualAvatar: Robust Gaussian Splatting Avatar with Dual Representation},
  booktitle = {ACM SIGGRAPH Asia 2024 Posters},
  year = {2024},
}