IEEE T-CSVT 2024

High-Quality Animatable Dynamic Garment Reconstruction from Monocular Videos

Xiongzheng Li1, Jinsong Zhang1, Yu-Kun Lai2, Jingyu Yang1, Kun Li1*

1 Tianjin University 2 Cardiff University

* Corresponding author

[Code] [Paper] [Arxiv]

Abstract

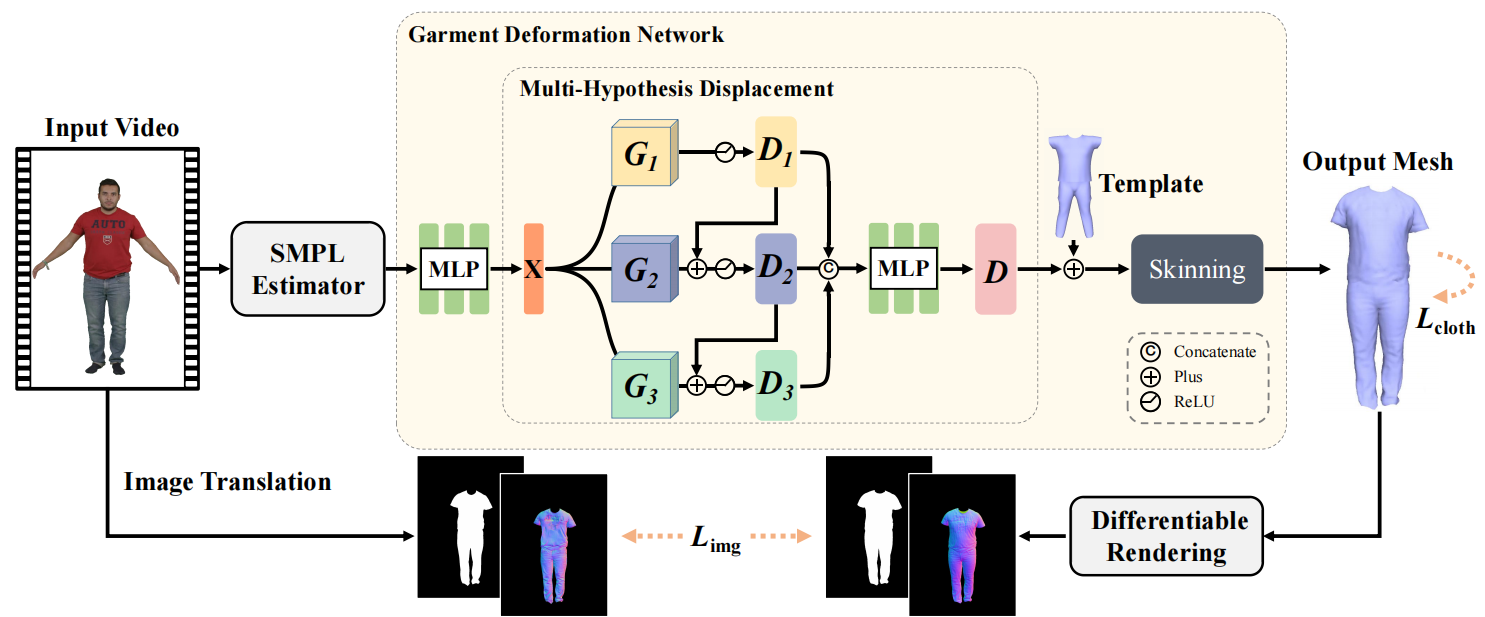

Much progress has been made in reconstructing garments from an image or a video. However, none of the existing works meets the expectations of digitizing high-quality animatable dynamic garments that can be adjusted to various unseen poses. In this paper, we propose the first method to recover high-quality animatable dynamic garments from monocular videos without depending on scanned data. To generate reasonable deformations for various unseen poses, we propose a learnable garment deformation network that formulates the garment reconstruction task as a pose-driven deformation problem. To alleviate the ambiguity of estimating 3D garments from monocular videos, we design a multi-hypothesis deformation module that learns spatial representations of multiple plausible deformations. Experimental results on several public datasets demonstrate that our method can reconstruct high-quality dynamic garments with coherent surface details, which can be easily animated under unseen poses. The code will be provided for research purposes.

Method

Fig 1. Method overview.

Demo

Results

Application

Technical Paper

Citation

Xiongzheng Li, Jinsong Zhang, Yu-Kun Lai, Jingyu Yang, Kun Li. "High-Quality Animatable Dynamic Garment Reconstruction from Monocular Videos". IEEE Transactions on Circuits and Systems for Video Technology, 2024.

@article{li2024tcsvt,
  author = {Xiongzheng Li and Jinsong Zhang and Yu-Kun Lai and Jingyu Yang and Kun Li},
  title = {High-Quality Animatable Dynamic Garment Reconstruction from Monocular Videos},
  journal = {IEEE Transactions on Circuits and Systems for Video Technology},
  volume = {34},
  number = {6},
  pages = {4243--4256},
  year = {2024},
}