Abstract

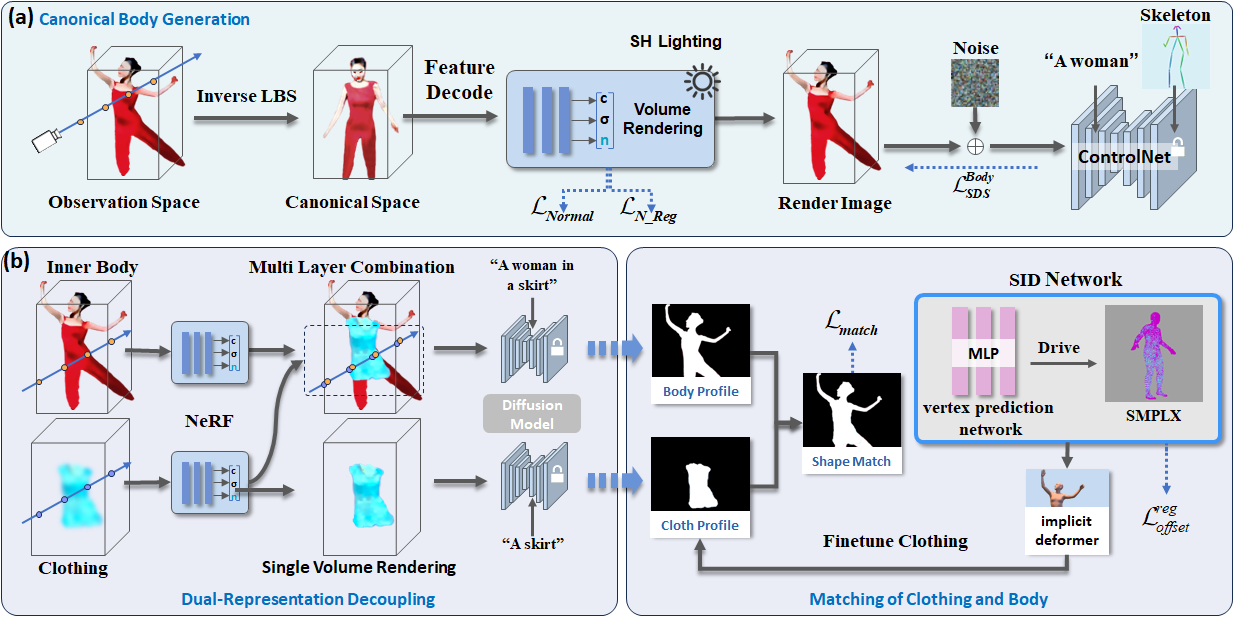

This paper aims to generate physically layered 3D humans from text prompts. Existing methods either generate a clothed 3D human as a single whole or support only tight, simple clothing, which limits their use in virtual try-on and part-level editing. To achieve physically layered 3D human generation with reusable and complex clothing, we propose a novel layer-wise dressed human representation based on a physically decoupled diffusion model. Specifically, to generate clothing layer by layer, we propose a dual-representation decoupling framework that generates clothing decoupled from the human body, together with a multi-layer fusion volume rendering method. To fit the clothing to different body shapes, we propose an SMPL-driven implicit field deformation network that allows clothing to be freely transferred and reused. Extensive experiments demonstrate that our approach not only achieves state-of-the-art layered 3D human generation with complex clothing but also supports virtual try-on and layered human animation.
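The abstract only names the multi-layer fusion volume rendering step. As a rough illustration of what compositing several layers along a ray can look like, the sketch below assumes that each layer (the body and each garment) is an implicit field that yields `(depth, density, color)` samples along a camera ray. It merges the samples of all layers in depth order and applies standard NeRF-style alpha compositing. This is a generic compositional-rendering technique, not the paper's implementation. The function name `fuse_layers_and_render`, the sample format, and the `far_delta` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sample format: (depth t along the ray, density sigma, RGB color).
Sample = Tuple[float, float, Tuple[float, float, float]]


def fuse_layers_and_render(
    layers: Sequence[Sequence[Sample]], far_delta: float = 1e10
) -> Tuple[Tuple[float, float, float], float]:
    """Composite samples from several layers (e.g. body, inner and outer garment)
    along one ray by merging them in depth order and alpha-compositing.

    Sketch of generic compositional volume rendering; assumed, not the paper's code.
    Returns the rendered RGB color and the accumulated opacity.
    """
    # Merge every layer's samples into a single list sorted by depth.
    merged: List[Sample] = sorted(
        (s for layer in layers for s in layer), key=lambda s: s[0]
    )

    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    for i, (t, sigma, rgb) in enumerate(merged):
        # Interval to the next sample; the last sample gets a very large interval.
        delta = merged[i + 1][0] - t if i + 1 < len(merged) else far_delta
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this interval
        weight = transmittance * alpha  # contribution of this sample to the pixel
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return (color[0], color[1], color[2]), 1.0 - transmittance


if __name__ == "__main__":
    body = [(2.0, 50.0, (0.8, 0.6, 0.5))]    # skin-colored body surface
    jacket = [(1.5, 50.0, (0.1, 0.1, 0.8))]  # blue jacket in front of the body
    rgb, opacity = fuse_layers_and_render([body, jacket])
    print(rgb, opacity)
```

Because samples are merged by depth rather than by layer order, an opaque outer garment occludes the body behind it regardless of the order in which the layers are passed. Removing a garment layer simply drops its samples, which is what makes a layered representation convenient for try-on style editing.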

Method

Fig. 1. Overview of our framework.


Citation

Yi Wang, Jian Ma, Ruizhi Shao, Qiao Feng, Yu-Kun Lai, Kun Li. "HumanCoser: Layered 3D Human Generation via Semantic-Aware Diffusion Model". In Proc. ISMAR, 2024.

@inproceedings{HumanCoser,
  author = {Yi Wang and Jian Ma and Ruizhi Shao and Qiao Feng and Yu-Kun Lai and Kun Li},
  title = {HumanCoser: Layered 3D Human Generation via Semantic-Aware Diffusion Model},
  booktitle = {Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},
  year = {2024}
}