Pose-Guided Attention Network for Human Pose Transfer

Kun Li¹, Jinsong Zhang¹, Yebin Liu², Yukun Lai³, Qionghai Dai²

¹Tianjin University  ²Tsinghua University  ³Cardiff University

Abstract

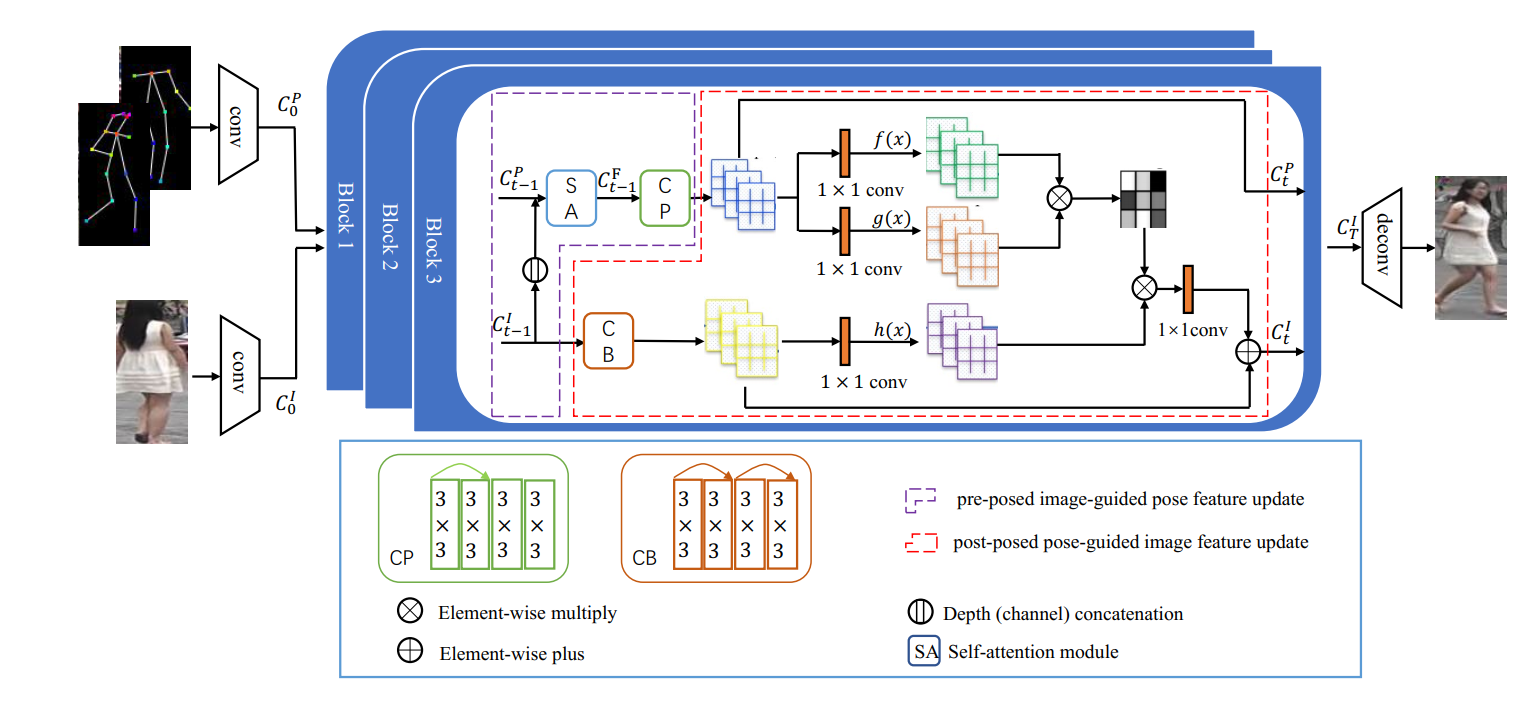

Human pose transfer, which aims to transfer the appearance of a given person to a target pose, is challenging and important for many applications. Previous work either ignores the guidance of pose features or uses only a local attention mechanism, leading to implausible and blurry results. We propose a new human pose transfer method using a generative adversarial network (GAN) with simplified cascaded blocks. In each block, we propose a pose-guided non-local attention (PoNA) mechanism with a long-range dependency scheme to select the more important regions of the image features to transfer. We also design a pre-posed image-guided pose feature update and a post-posed pose-guided image feature update to better utilize the pose and image features. Our network is simple, stable, and easy to train. Quantitative and qualitative results on the Market-1501 and DeepFashion datasets show the efficacy and efficiency of our model. Compared with state-of-the-art methods, our model generates sharper and more realistic images with rich details, while having fewer parameters and running faster. Furthermore, our generated images can help alleviate data insufficiency for person re-identification.
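To make the idea of pose-guided non-local attention more concrete, here is a minimal NumPy sketch of a generic version, not the exact PoNA block from the paper. It assumes that the attention map is computed from pose features alone (queries and keys are projected from the pose features), that this map is then used to aggregate image features from every spatial position (the long-range dependency), and that a residual connection keeps the original image features. The function name, the projection matrices `w_q`, `w_k`, `w_v`, and the scaled dot-product form are all illustrative assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def pose_guided_nonlocal_attention(img_feat, pose_feat, w_q, w_k, w_v):
    """Hypothetical pose-guided non-local attention (illustrative sketch).

    img_feat:  (C_img, H, W) image features
    pose_feat: (C_pose, H, W) pose features
    w_q, w_k:  (D, C_pose) assumed projections applied to pose features
    w_v:       (C_img, C_img) assumed projection applied to image features
    Returns (updated image features of shape (C_img, H, W), attention map (N, N)).
    """
    c_img, h, w = img_feat.shape
    n = h * w
    # Flatten the spatial dimensions so every position can attend to every other one.
    img = img_feat.reshape(c_img, n)                  # (C_img, N)
    pose = pose_feat.reshape(pose_feat.shape[0], n)   # (C_pose, N)

    q = w_q @ pose   # (D, N) queries from pose features
    k = w_k @ pose   # (D, N) keys from pose features
    v = w_v @ img    # (C_img, N) values from image features

    # Pairwise affinities between all positions, guided only by pose.
    # Row i holds the weights that position i assigns to every position j.
    attn = softmax((q.T @ k) / np.sqrt(q.shape[0]), axis=-1)  # (N, N)

    # Output at position i = sum over j of attn[i, j] * v[:, j].
    out = v @ attn.T  # (C_img, N)

    # Residual connection: add the attended features to the original ones.
    return (img + out).reshape(c_img, h, w), attn
```

Because the attention map depends only on the pose features, the target pose decides *where* to gather appearance information, while the image features supply *what* is gathered. With `w_v` set to zeros, the attended term vanishes and the block returns its input unchanged, which is one convenient way to sanity-check the residual path.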


Fig 1. Method overview.

Results

Fig 2. Comparison with several state-of-the-art methods on the DeepFashion dataset.

Technical Paper

Citation

Kun Li, Jinsong Zhang, Yebin Liu, Yu-kun Lai, Qionghai Dai. "PoNA: Pose-guided Non-local Attention for Human Pose Transfer". IEEE Transactions on Image Processing, vol. 29, pp. 9584-9599, 2020.

@ARTICLE{9222550,
  author={Li, Kun and Zhang, Jinsong and Liu, Yebin and Lai, Yu-kun and Dai, Qionghai},
  journal={IEEE Transactions on Image Processing},
  title={{P}o{NA}: Pose-Guided Non-Local Attention for Human Pose Transfer},
  year={2020},
  volume={29},
  pages={9584-9599},
}