AAAI 2025 Oral

InterCoser: Interactive 3D Character Creation with Disentangled Fine-Grained Features

Yi Wang1†, Jian Ma1†, Zhuo Su2, Guidong Wang2, Jingyu Yang1, Yu-Kun Lai3, Kun Li1*

1 Tianjin University 2 ByteDance China 3 Cardiff University

† Equal contribution * Corresponding author

[Paper] [Supplemental] [Code]

Abstract

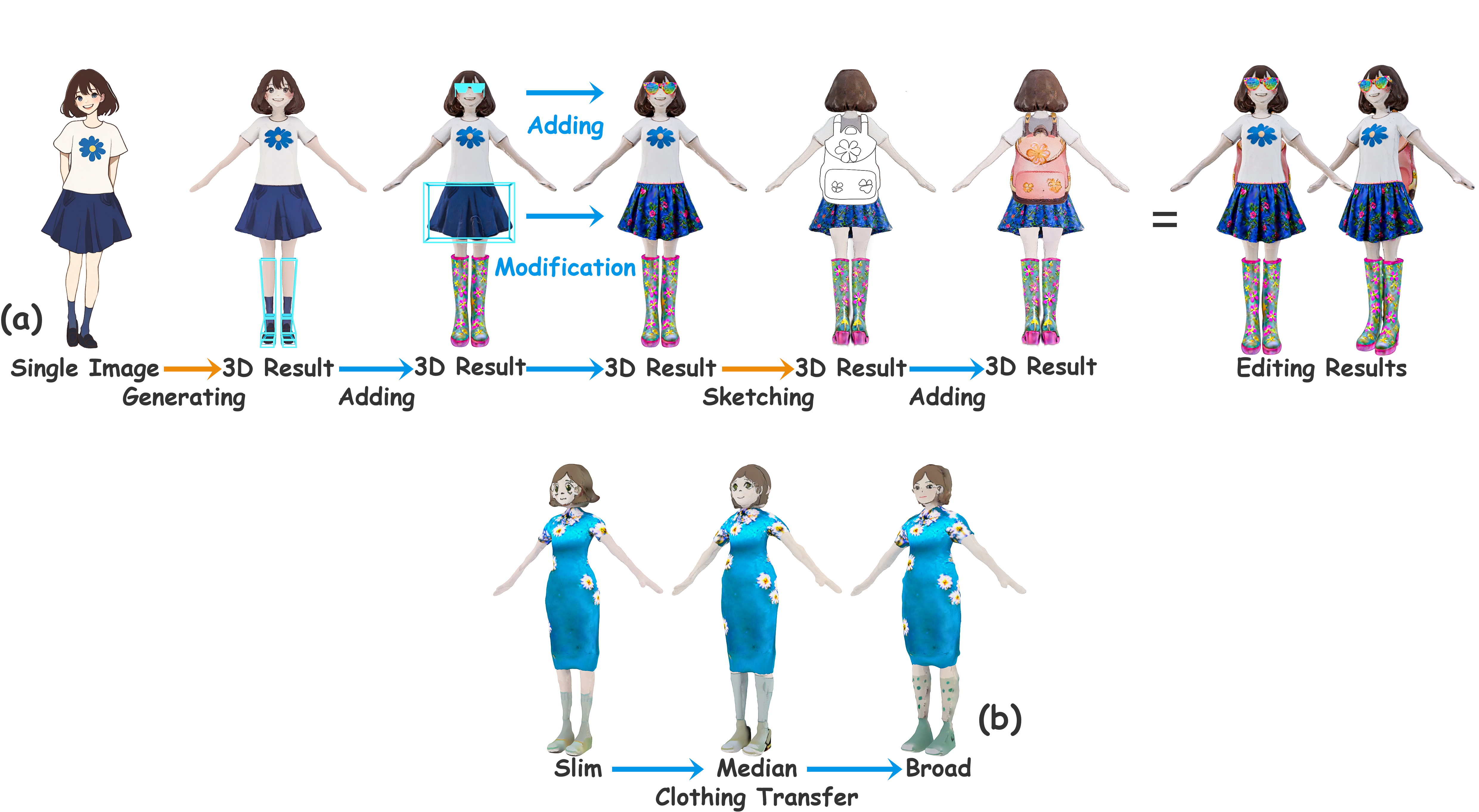

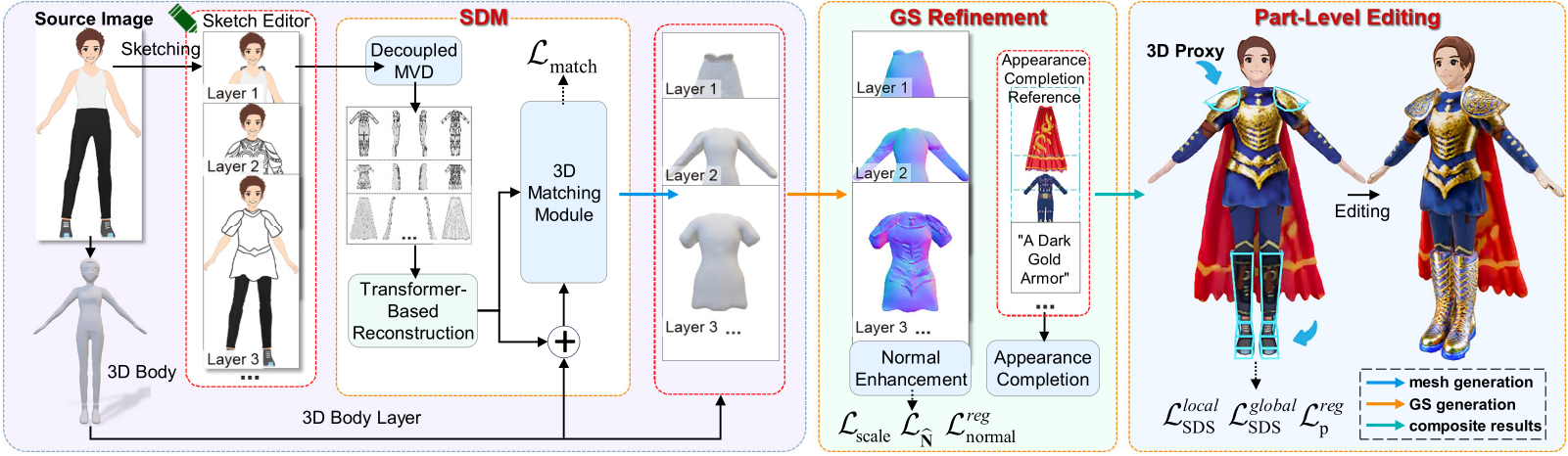

This paper aims to interactively generate and edit disentangled 3D characters based on precise user instructions. Existing methods rely on coarse, simple editing guidance and entangled representations, which makes it difficult to achieve precise and comprehensive control over fine-grained local editing and free clothing transfer. To enable accurate and intuitive control over the generation and editing of high-quality 3D characters with freely interchangeable clothing, we propose a novel user-interactive approach for disentangled 3D character creation. Specifically, we introduce two user-friendly interaction approaches: a sketch-based layered character generation/editing method, which supports clothing transfer, and a 3D-proxy-based part-level editing method, which enables fine-grained disentangled editing. To enhance 3D character quality, we propose a 3D Gaussian reconstruction strategy guided by geometric priors, ensuring that the characters exhibit detailed local geometry and smooth global surfaces. Extensive experiments on both public datasets and in-the-wild data demonstrate that our approach not only generates high-quality disentangled 3D characters but also supports precise and fine-grained editing through user interaction.

Method

Fig. 1. Overview of our framework.

Demo

Technical Paper

Citation

Yi Wang, Jian Ma, Zhuo Su, Guidong Wang, Jingyu Yang, Yu-Kun Lai, Kun Li. "InterCoser: Interactive 3D Character Creation with Disentangled Fine-Grained Features". AAAI 2025 Oral, 2025.

@inproceedings{InterCoser,
  author = {Yi Wang and Jian Ma and Zhuo Su and Guidong Wang and Jingyu Yang and Yu-Kun Lai and Kun Li},
  title = {InterCoser: Interactive 3D Character Creation with Disentangled Fine-Grained Features},
  booktitle = {AAAI 2025 Oral},
  year = {2025}
}