SIGGRAPH Asia 2025 Technical Communications

DreamCoser: Controllable Layered 3D Character Generation and Editing

Yi Wang¹†, Jian Ma¹†, Zhuo Su², Guidong Wang², Yu-Kun Lai³, Kun Li¹*

¹Tianjin University ²ByteDance China ³Cardiff University

† Equal contribution * Corresponding author


Abstract

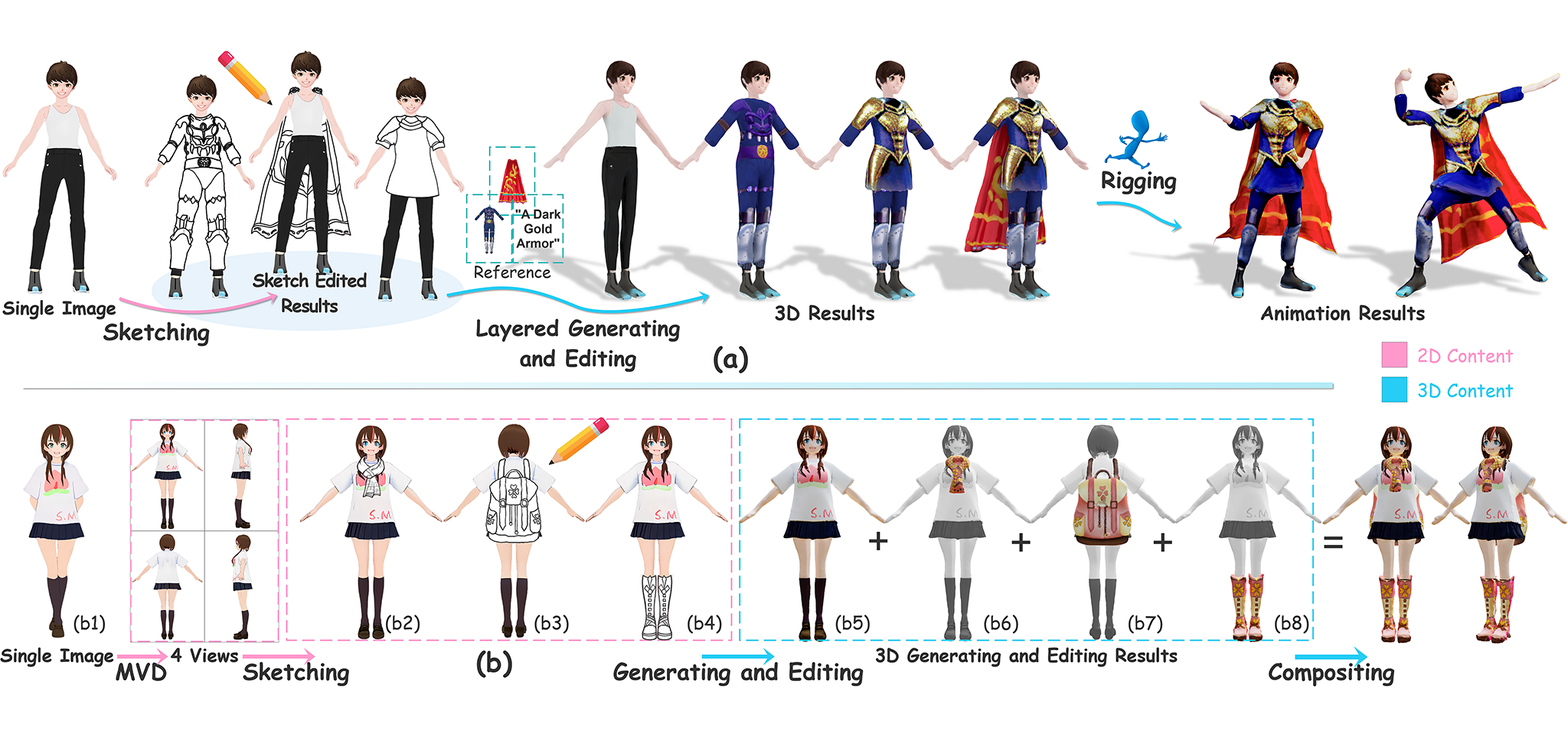

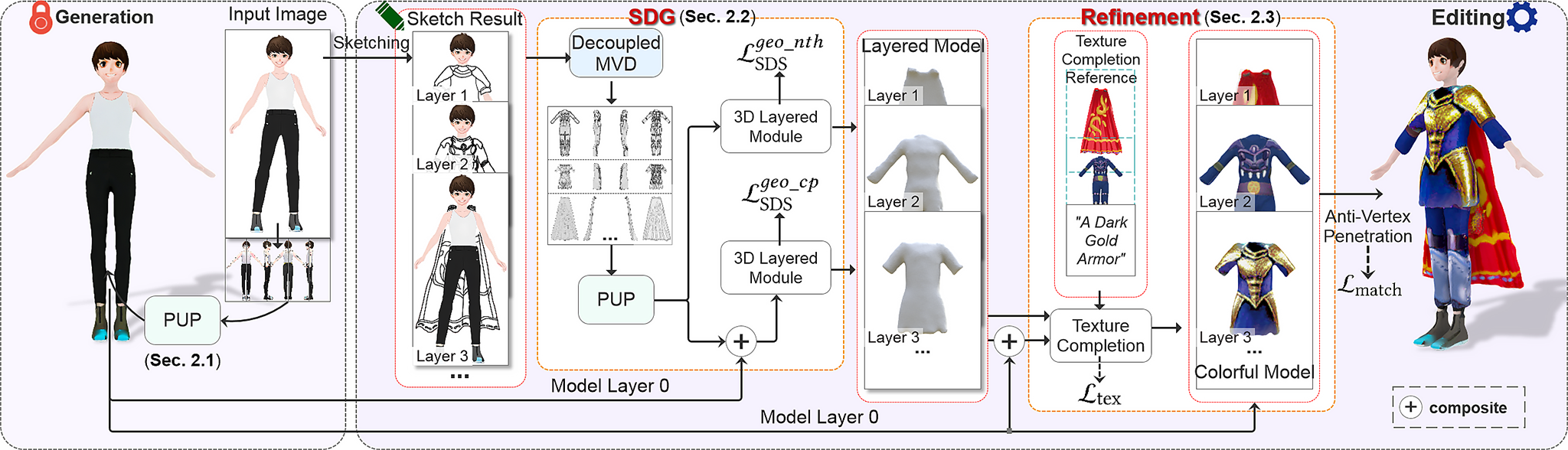

This paper aims to controllably generate and edit layered 3D characters from hand-drawn sketches. Existing methods generate 3D characters either through global optimization or with coupled representations, which makes fine-grained local editing and clothing changes difficult. To achieve controllable generation and part-level editing of high-quality 3D characters with changeable clothing, we propose a sketch-based method for layered 3D character generation and editing. Specifically, we propose a sketch-to-3D decoupled generation network that builds the character in separate layers, enabling fine-grained, part-level generation and editing. To ensure that the generated character has fine textures and complex geometric structures, we propose a sketch-aware progressive upsampling module that produces a high-quality character appearance. Extensive experiments on public datasets and even on in-the-wild data demonstrate that our approach not only generates high-quality layered 3D characters but also enables fine-grained local editing through hand-drawn sketches.
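The abstract does not describe the underlying data structures. As a rough illustration of why a layered (decoupled) representation makes part-level editing and clothing changes cheap, the following Python sketch stores each part of a character as its own layer, so that a new sketch for one part regenerates only that layer. This is not the authors' implementation: `Layer`, `LayeredCharacter`, `generate_layer` and `LAYER_ORDER` are hypothetical names, and `generate_layer` merely stands in for a per-part sketch-to-3D network, returning a small record instead of geometry and texture.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List

# Hypothetical composition order: the body first, clothing layers on top of it.
LAYER_ORDER = ["body", "bottom", "top", "hair"]


@dataclass(frozen=True)
class Layer:
    """One independently generated part of the character (illustrative only)."""
    name: str         # which part this layer represents, e.g. "top"
    sketch: str       # identifier of the sketch that conditioned this layer
    version: int = 0  # incremented every time the layer is regenerated


def generate_layer(name: str, sketch: str) -> Layer:
    # Hypothetical placeholder for a per-part sketch-to-3D generator; a real
    # system would return geometry and texture here instead of a small record.
    return Layer(name=name, sketch=sketch)


@dataclass
class LayeredCharacter:
    layers: Dict[str, Layer] = field(default_factory=dict)

    def add(self, name: str, sketch: str) -> None:
        self.layers[name] = generate_layer(name, sketch)

    def edit(self, name: str, new_sketch: str) -> List[str]:
        """Regenerate only the named layer and return the names that changed."""
        old = self.layers[name]
        new = generate_layer(name, new_sketch)
        self.layers[name] = replace(new, version=old.version + 1)
        return [name]

    def remove(self, name: str) -> None:
        # Dropping a clothing layer leaves the body and other parts intact.
        del self.layers[name]

    def compose(self) -> List[Layer]:
        """Return the layers in composition order, skipping absent parts."""
        return [self.layers[n] for n in LAYER_ORDER if n in self.layers]


if __name__ == "__main__":
    character = LayeredCharacter()
    character.add("body", "body_sketch_v1")
    character.add("top", "jacket_sketch")
    character.add("bottom", "skirt_sketch")

    # Swap the jacket for a T-shirt: only the "top" layer is regenerated.
    changed = character.edit("top", "tshirt_sketch")
    print(changed)  # ['top']
    print([(l.name, l.sketch, l.version) for l in character.compose()])
```

In this toy setting, the body and skirt layers keep `version == 0` after the jacket is swapped. With a single coupled representation, the same edit would instead require regenerating the whole character.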

Method

Fig. 1. Overview of our framework.


Citation

Yi Wang, Jian Ma, Zhuo Su, Guidong Wang, Yu-Kun Lai, Kun Li. "DreamCoser: Controllable Layered 3D Character Generation and Editing". SIGGRAPH Asia 2025 Technical Communications, 2025.

@inproceedings{DreamCoser,
  author    = {Yi Wang and Jian Ma and Zhuo Su and Guidong Wang and Yu-Kun Lai and Kun Li},
  title     = {DreamCoser: Controllable Layered 3D Character Generation and Editing},
  booktitle = {SIGGRAPH Asia 2025 Technical Communications},
  year      = {2025}
}