Graph-based Segmentation for RGB-D Data Using 3-D Geometry Enhanced Superpixels

Jingyu Yang, Ziqiao Gan, Kun Li, Chunping Hou

Abstract

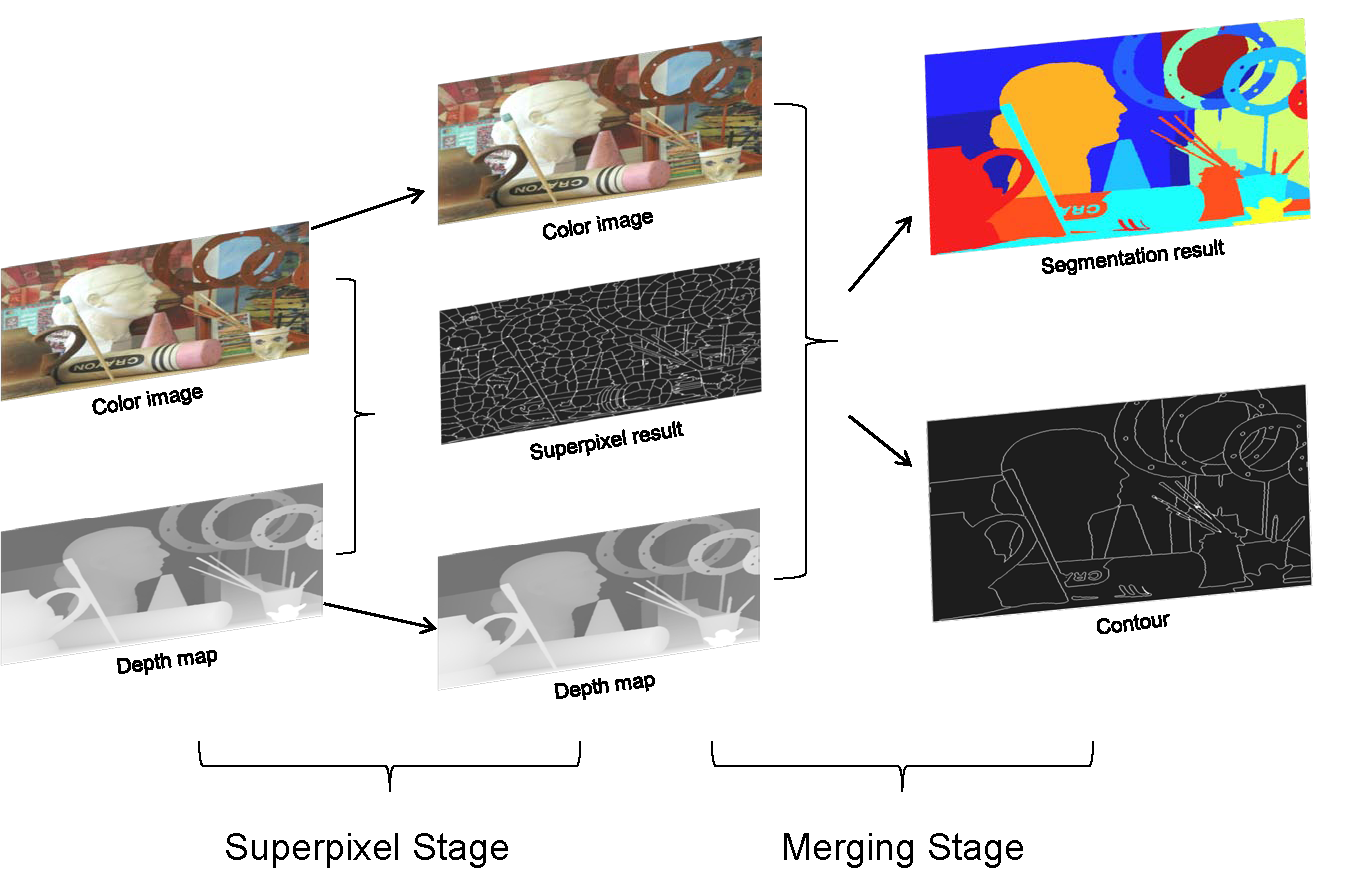

With advances in depth sensing technologies, color images accompanied by depth information (referred to as RGB-D data hereafter) are increasingly popular for the comprehensive description of 3-D scenes. This paper proposes a two-stage segmentation method for RGB-D data: 1) oversegmentation by 3-D geometry enhanced superpixels; and 2) graph-based merging with label cost from superpixels. In the oversegmentation stage, 3-D geometrical information is reconstructed from the accompanying depth map. Then, a K-means-like clustering method is applied to the RGB-D data for oversegmentation, using an 8-D distance metric constructed from both color and 3-D geometrical information. In the merging stage, each superpixel is treated as a node, and a graph-based model is set up to relabel the superpixels into semantically coherent segments. In the graph-based model, RGB-D proximity, texture similarity, and boundary continuity are incorporated into the smoothness term to exploit the correlations of neighboring superpixels. To obtain a compact labeling, the label term is designed to penalize labels linking to similar superpixels that likely belong to the same object. Both the proposed 3-D geometry enhanced superpixel clustering method and the graph-based segmentation method from superpixels are evaluated by quantitative results and visual comparisons. By fusing color and depth information, the proposed methods achieve superior segmentation performance over several state-of-the-art algorithms.

Fig. 1. The block diagram of the proposed RGB-D segmentation method.
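The oversegmentation stage summarized in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation, and several pieces are assumptions made only for illustration: a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` is used to recover 3-D points from depth, and the eight feature components are taken to be three color channels, the 3-D position, and the two pixel coordinates (the abstract does not list the components). The weights `w_color`, `w_geom`, and `w_pix` are likewise hypothetical.

```python
import numpy as np

def backproject(depth, fx, fy, cx, cy):
    """Back-project a depth map (H, W) to 3-D points (H, W, 3).

    Assumes a pinhole camera model; the intrinsics are hypothetical inputs.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w].astype(np.float64)  # row and column indices
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1)

def features_8d(color, depth, fx, fy, cx, cy):
    """Stack an assumed 8-D feature per pixel: color (3), 3-D point (3), pixel (2)."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w].astype(np.float64)
    xyz = backproject(depth, fx, fy, cx, cy)
    return np.concatenate(
        [color.astype(np.float64), xyz, u[..., None], v[..., None]], axis=-1
    )

def distance_8d(feats, center, w_color=1.0, w_geom=1.0, w_pix=1.0):
    """Weighted Euclidean distance from every pixel feature to one cluster center."""
    d = feats - center
    d_color = np.sum(d[..., 0:3] ** 2, axis=-1)
    d_geom = np.sum(d[..., 3:6] ** 2, axis=-1)
    d_pix = np.sum(d[..., 6:8] ** 2, axis=-1)
    return np.sqrt(w_color * d_color + w_geom * d_geom + w_pix * d_pix)

def assign_labels(feats, centers):
    """One K-means-like assignment step: nearest center for every pixel."""
    dists = np.stack([distance_8d(feats, c) for c in centers], axis=0)
    return np.argmin(dists, axis=0)
```

In a K-means-like loop, `assign_labels` would alternate with recomputing each center as the mean feature of its assigned pixels. Practical superpixel methods such as SLIC [3] also restrict the search to a local window around each center, which this sketch omits for brevity.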

Evaluation on Superpixels

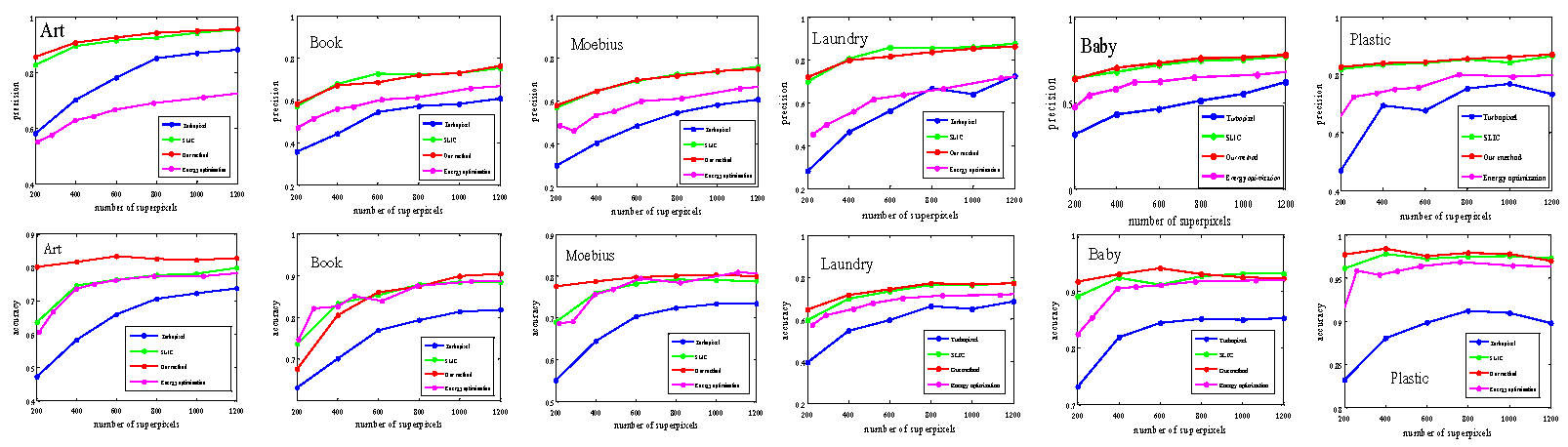

1. Quantitative Results for superpixels

Fig. 2. Evaluation in precision and accuracy of superpixels generated by Turbopixels [1], Energy optimization [2], SLIC [3], and the proposed method for the RGB-D datasets [10] Art, Books, Moebius, Laundry, Baby, and Plastic, from left to right. The top and bottom rows present superpixel results in precision and accuracy, respectively.

2. Visual Comparison for superpixel results

Fig. 3. Visual comparison of superpixels for Art, Books, and Moebius. For each row, results from left to right are obtained by Quick-shift [4], Turbopixels [1], Energy optimization [2], SLIC [3], and our method.

Click here to download all our segmentation results in Fig. 3.

Evaluation on Segmentation Results

1. Dataset with Ground Truth

Click here to download all the ground truth images in Fig. 4.

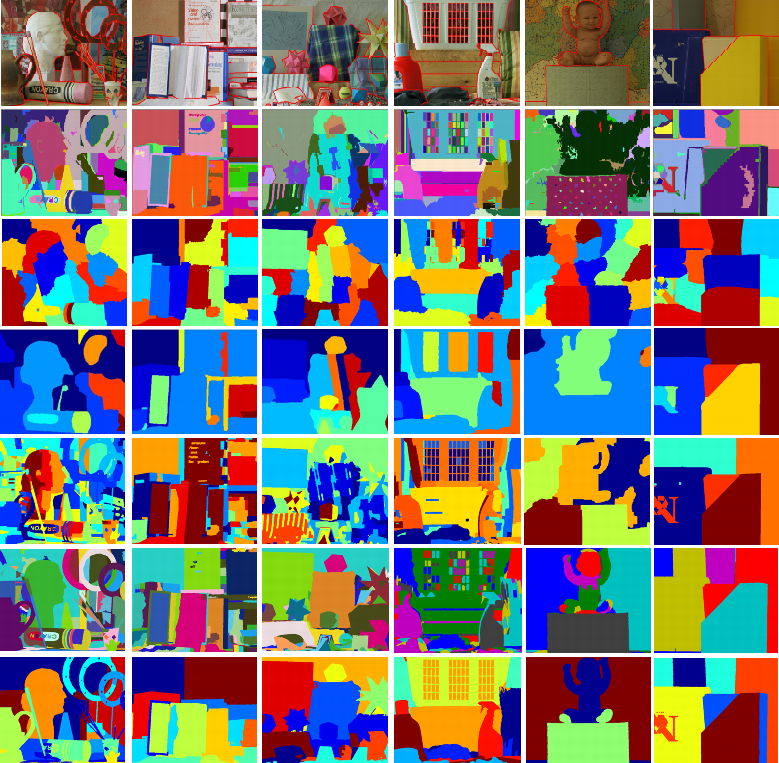

2. Comparison with RGB Segmentation Methods

Fig. 5. Segmentation results for Art, Books, Moebius, Laundry, Baby, and Plastic, from top to bottom. Each row shows, from left to right, the ground-truth segmentation and the segmentation results produced by EG [5], MGD [6], CDHS [7], KGC [8], Ours_LM [9], and our method, respectively.

Click here to download all our segmentation results in Fig. 5.

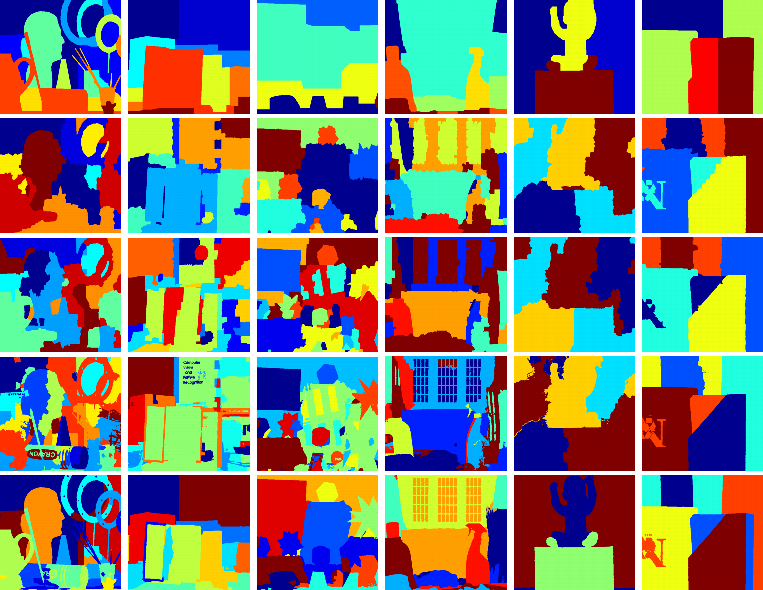

3. Graph-based Merging on Different Superpixels

Fig. 6. Comparison of our method on different inputs for Art, Books, Moebius, Laundry, Baby, and Plastic, from left to right. Each column shows, from top to bottom, the segmentation results produced by D_GC, Turbo_GC, EO_GC, SLIC_GC, and the proposed method.

Click here to download all the segmentation results in Fig. 6.

1. Jingyu Yang, Ziqiao Gan, Kun Li, Chunping Hou, “Graph-based Segmentation for RGB-D Data Using 3-D Geometry Enhanced Superpixels”, IEEE Transactions on Cybernetics, In Press.

2. Jingyu Yang, Ziqiao Gan, Xiaolei Gui, Kun Li, Chunping Hou, “3-D geometry enhanced superpixels for RGB-D data,” in Advances in Multimedia Information Processing–PCM 2013, pp. 35–46, 2013.

[1] A. Levinshtein, A. Stere, K. N. Kutulakos, D. J. Fleet, S. J. Dickinson, and K. Siddiqi, “Turbopixels: Fast superpixels using geometric flows,” IEEE TPAMI, vol. 31, no. 12, pp. 2290–2297, 2009.

[2] O. Veksler, Y. Boykov, and P. Mehrani, “Superpixels and supervoxels in an energy optimization framework,” in Proc. ECCV, pp. 211–224, 2010.

[3] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Susstrunk, “SLIC superpixels compared to state-of-the-art superpixel methods,” IEEE TPAMI, vol. 34, no. 11, pp. 2274–2282, 2012.

[4] A. Vedaldi and S. Soatto, “Quick shift and kernel methods for mode seeking,” in Proc. ECCV, pp. 705–718, 2008.

[5] P. F. Felzenszwalb and D. P. Huttenlocher, “Efficient graph-based image segmentation,” IJCV, vol. 59, no. 2, pp. 167–181, 2004.

[6] T. Cour, F. Benezit, and J. Shi, “Spectral segmentation with multiscale graph decomposition,” in Proc. CVPR, vol. 2, pp. 1124–1131, 2005.

[7] P. Arbelaez, M. Maire, C. Fowlkes, and J. Malik, “Contour detection and hierarchical image segmentation,” IEEE TPAMI, vol. 33, no. 5, pp. 898–916, 2011.

[8] M. B. Salah, A. Mitiche, and I. B. Ayed, “Multiregion image segmentation by parametric kernel graph cuts,” IEEE TIP, vol. 20, no. 2, pp. 545–557, 2011.

[9] J. Yang, Z. Gan, X. Gui, K. Li, and C. Hou, “3-D geometry enhanced superpixels for RGB-D data,” in Advances in Multimedia Information Processing–PCM 2013, pp. 35–46, 2013.

[10] Middlebury Datasets, “http://vision.middlebury.edu/stereo/data/.”