Video Super-Resolution Using an Adaptive Superpixel-Guided Auto-Regressive Model

Kun Li, Yanming Zhu, Jingyu Yang, Jianmin Jiang

Abstract

This paper proposes a video super-resolution method based on an adaptive superpixel-guided auto-regressive (AR) model. Key-frames are selected automatically and super-resolved by a sparse regression method. Non-key-frames are super-resolved by exploiting spatial and temporal correlations simultaneously: the temporal correlation is exploited by an optical flow method, while the spatial correlation is modelled by a superpixel-guided AR model. Experimental results show that the proposed method outperforms existing benchmark methods in terms of both subjective visual quality and objective peak signal-to-noise ratio (PSNR). The proposed method also has the shortest running time among the compared state-of-the-art methods, which makes it suitable for practical applications.
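To make the idea of a superpixel-guided AR model more concrete, the following is a minimal sketch and not the formulation used in the paper. It assumes that a pixel is predicted as a normalized weighted sum of its neighbours, that only neighbours sharing the pixel's superpixel label contribute, and that the weights decay with intensity difference. The function name `ar_predict` and the parameters `radius` and `sigma` are hypothetical and chosen for illustration only.

```python
import math


def ar_predict(frame, labels, y, x, radius=1, sigma=10.0):
    """Predict frame[y][x] from its neighbours (illustrative assumption only).

    frame  : 2-D list of grayscale intensities
    labels : 2-D list of superpixel labels, same shape as frame
    Only neighbours inside the window that share the centre pixel's
    superpixel label contribute to the prediction.
    """
    height, width = len(frame), len(frame[0])
    centre = frame[y][x]
    weighted_sum = 0.0
    weight_total = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy == 0 and dx == 0:
                continue  # the centre pixel does not predict itself
            ny, nx = y + dy, x + dx
            if not (0 <= ny < height and 0 <= nx < width):
                continue  # skip positions outside the frame
            if labels[ny][nx] != labels[y][x]:
                continue  # superpixel guidance: ignore other segments
            # Assumed weighting: Gaussian of the intensity difference.
            diff = frame[ny][nx] - centre
            weight = math.exp(-(diff * diff) / (2.0 * sigma * sigma))
            weighted_sum += weight * frame[ny][nx]
            weight_total += weight
    if weight_total == 0.0:
        return float(centre)  # no usable neighbour: keep the original value
    return weighted_sum / weight_total


# Toy 3x3 frame with two segments: a dark region and a bright column.
toy_frame = [[10, 10, 200], [10, 10, 200], [10, 10, 200]]
toy_labels = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]
toy_prediction = ar_predict(toy_frame, toy_labels, 1, 1)
```

In this toy case the bright column belongs to a different superpixel, so it is excluded and the prediction for the centre pixel depends only on the dark region. Restricting the support to one superpixel is what keeps the prediction from blending across an object boundary.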

Fig. 1. Framework of the proposed method.

Downloads

Source code with datasets: download code here

The code is based on VLFeat (http://www.vlfeat.org/).

Evaluation on Publicly Available Datasets

1. Quantitative Evaluation

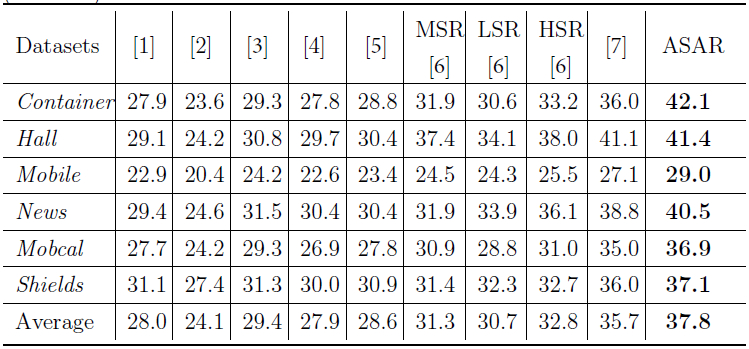

Table 1: Quantitative evaluation of the super-resolution performance of different algorithms (PSNR, dB).
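For readers who want to reproduce this kind of measurement, PSNR can be computed as in the sketch below. It assumes 8-bit grayscale frames stored as lists of rows and a peak value of 255. The exact evaluation protocol behind the table (colour channel, border handling) is not stated here, so the helper `psnr` is illustrative rather than the authors' evaluation code.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Return the PSNR in dB between two equally sized grayscale frames."""
    if len(reference) != len(estimate):
        raise ValueError("frames must have the same number of rows")
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("rows must have the same length")
        for ref_value, est_value in zip(ref_row, est_row):
            squared_error += (ref_value - est_value) ** 2
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    mse = squared_error / count  # mean squared error over all pixels
    if mse == 0.0:
        return math.inf  # identical frames
    return 10.0 * math.log10(peak * peak / mse)


# Small example: every pixel is off by 10 grey levels, so the MSE is 100.
example_psnr = psnr([[100, 100]], [[110, 90]])
```

A higher PSNR means the super-resolved frame is closer to the ground truth in the mean-squared-error sense. It does not always agree with perceived quality, which is why a qualitative comparison is reported as well.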

2. Qualitative Evaluation

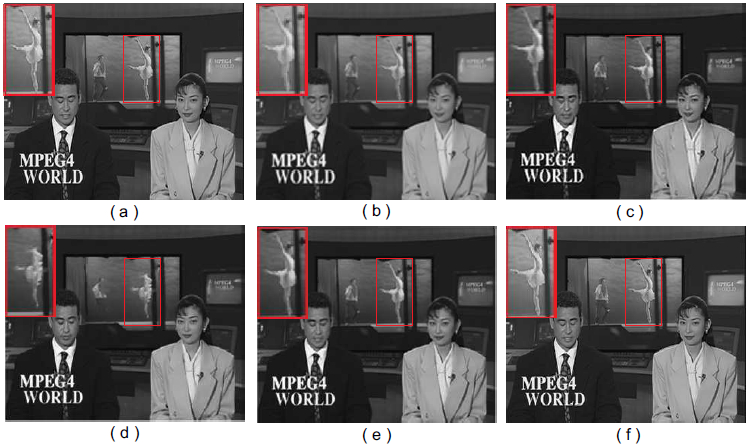

Fig. 2. Super-resolution results for News recovered by (b) bicubic interpolation, (c) the method in Ref. [5], (d) MSR in Ref. [6], (e) HSR in Ref. [6], and (f) our method, compared with (a) the ground truth.

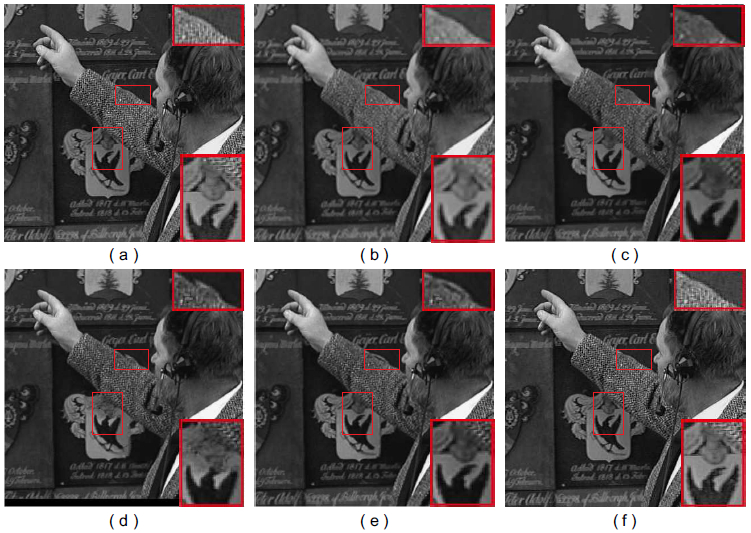

Fig. 3. Super-resolution results for Shields recovered by (b) bicubic interpolation, (c) the method in Ref. [5], (d) MSR in Ref. [6], (e) HSR in Ref. [6], and (f) our method, compared with (a) the ground truth.

Evaluation on More Datasets

1. Datasets

2. Super-Resolution for Surveillance Video

Fig. 5. Super-resolution results for the Surveillance sequence, magnified 3 times. Top row: the 1st frame result. Middle row: the 11th frame result. Bottom row: the 21st frame result.

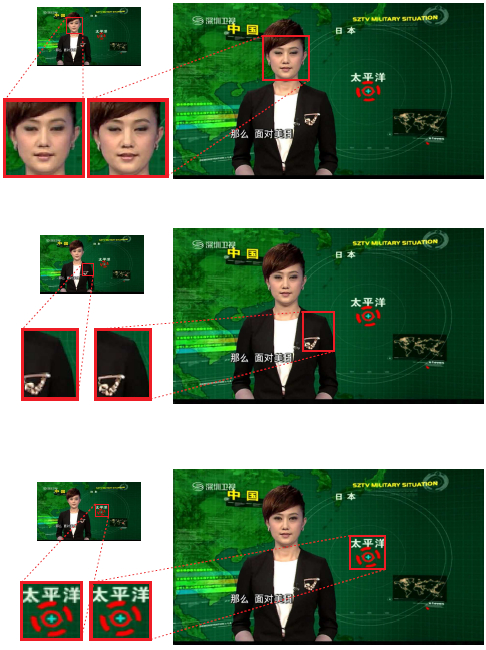

3. Super-Resolution for TV Video

Fig. 6. Super-resolution results for the TV sequence, magnified 3 times. Top row: the 2nd frame result. Middle row: the 3rd frame result. Bottom row: the 4th frame result.

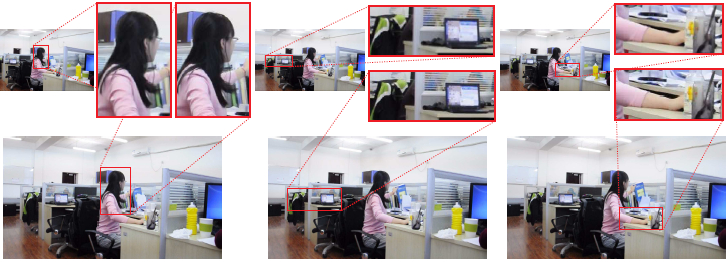

4. Super-Resolution for Lab Video

Fig. 7. Super-resolution results for the Lab sequence. From left to right: the 63rd frame result, the 65th frame result, and the 67th frame result.

Evaluation on Running Time

Table 2: Running time of the compared algorithms.

Publication

[1] H. Hou, H. Andrews, Cubic splines for image interpolation and digital filtering, IEEE Trans. Acoustics, Speech and Signal Processing 26 (5) (1978) 508–517.

[2] J. Yang, J. Wright, T. S. Huang, Y. Ma, Image super-resolution via sparse representation, IEEE Trans. Image Processing 19 (11) (2010) 2861–2873.

[3] S. Farsiu, M. D. Robinson, M. Elad, P. Milanfar, Fast and robust multiframe super resolution, IEEE Trans. Image Processing 13 (10) (2004) 1327–1344.

[4] W. Fan, D. Y. Yeung, Image hallucination using neighbor embedding over visual primitive manifolds, in: Proc. IEEE Conf. Computer Vision and Pattern Recognition, 2007, pp. 1–7.

[5] F. Brandi, R. Queiroz, D. Mukherjee, Super-resolution of video using key frames and motion estimation, in: Proc. IEEE International Conference on Image Processing, 2008, pp. 321–324.

[6] B. C. Song, S.-C. Jeong, Y. Choi, Video super-resolution algorithm using bi-directional overlapped block motion compensation and on-the-fly dictionary training, IEEE Trans. Circuits and Systems for Video Technology 21 (3) (2011) 274–285.

[7] E. M. Hung, R. L. de Queiroz, F. Brandi, Video super-resolution using codebooks derived from key-frames, IEEE Trans. Circuits and Systems for Video Technology 22 (9) (2012) 1321–1331.